Radically accelerate your roadmap with Tangram Vision's perception tools and infrastructure.

In part one of our deep-dive into event cameras, we investigated the history and architecture of these amazing sensors, as well as the surprising trade-offs one might have to make when using them. But that's only one side of the story. How do event cameras compare to the Common Sensors: conventional cameras, depth, and LiDAR? How useful are these sensors in autonomy? How can we use them today, and if we can't, what's the big hold-up?

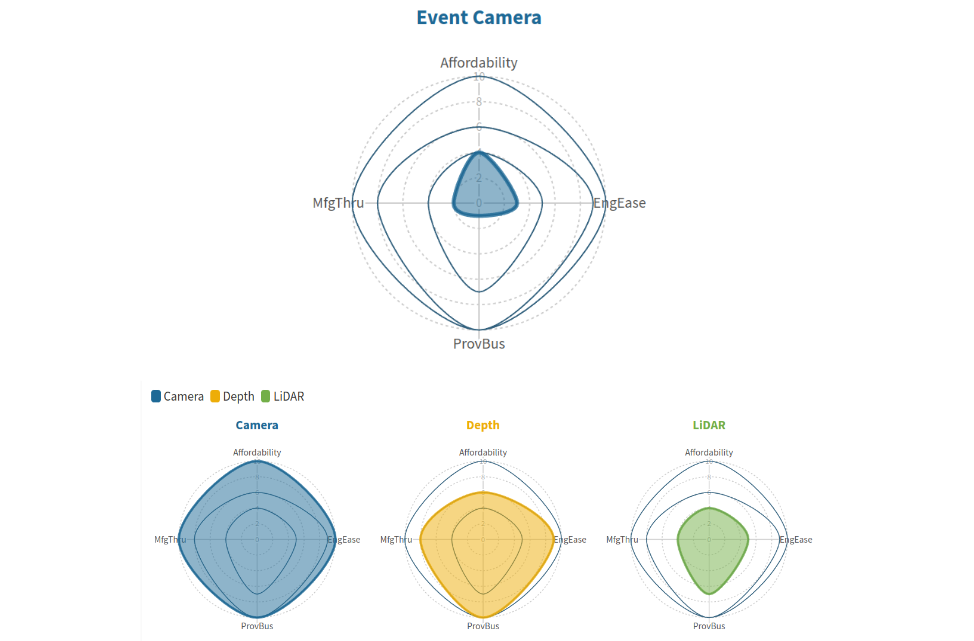

Let's break it down using my handy and very official Technical and Commercial Metrics from the intro Sensoria Obscura post, starting with a Technical Evaluation.

Due to their neuromorphic nature, event cameras are some of the most robust sensors on the market. One will receive meaningful signal in nearly any condition: nighttime, daytime, extreme contrasts, it doesn’t matter. Every pixel is its own sensor, so as long as there’s a meaningful change to report at that exact pixel, one will receive it. Not only that, but the signal comes through nearly instantaneously, which allows for near-constant reactivity from an autonomous program. Combined, these unique attributes make event cameras a viable autonomy sensor. They shine so far ahead of any other sensor in this category that their other deficits are nearly made up for.

Sadly, the deficits are there. Event cameras are just cameras, at the end of the day; no matter the tech underlying the sensor, event cameras are still crammed into the same form factor as a conventional CMOS sensor. This means that their field of view, range, and fidelity aren’t ever going to surpass the limits of that form factor.

We also know from our breakdown in Part I that event cameras are cursed with Too Much Data. Paradoxically, as the fidelity of our sensor goes up, the throughput rises so dramatically that communication speed suffers. This means there is a balance one must strike between camera resolution and throughput that’s much more serious than anything one would have to worry about with a 60fps high-res CMOS camera. For the time being, applications that want to use event cameras are “stuck with” lower-resolution solutions. I want to re-emphasize that this is not a bad thing (see [3]), but it certainly is a restriction to keep in mind.
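To get a feel for that balance, here is a back-of-the-envelope sketch in Python. Every number in it is an assumption chosen for illustration, not a measurement: the 8-byte event size (`bytes_per_event`), the mean per-pixel event rate, and the 8-bit monochrome frame format are all hypothetical, and real sensors typically pack events more compactly than this.

```python
def event_rate(width: int, height: int, mean_events_per_pixel_per_s: float) -> float:
    """Total events per second if every pixel fires at the same mean rate."""
    return width * height * mean_events_per_pixel_per_s


def event_bandwidth_mbps(events_per_second: float, bytes_per_event: int = 8) -> float:
    """Raw link bandwidth (megabits/s) for an unpacked event stream.

    bytes_per_event is a hypothetical size covering x, y, polarity, and timestamp.
    """
    return events_per_second * bytes_per_event * 8 / 1e6


def frame_bandwidth_mbps(width: int, height: int, fps: float, bytes_per_pixel: int = 1) -> float:
    """Raw link bandwidth (megabits/s) for an uncompressed frame-based camera."""
    return width * height * fps * bytes_per_pixel * 8 / 1e6


if __name__ == "__main__":
    busy_scene_rate = 100.0  # assumed events per pixel per second in a dynamic scene

    for name, (w, h) in {"VGA": (640, 480), "HD": (1280, 720)}.items():
        mev = event_rate(w, h, busy_scene_rate) / 1e6
        mbps = event_bandwidth_mbps(event_rate(w, h, busy_scene_rate))
        print(f"{name} event camera: {mev:.1f} Mev/s, {mbps:.1f} Mbit/s")

    print(f"HD 60fps mono CMOS: {frame_bandwidth_mbps(1280, 720, 60):.1f} Mbit/s")
```

Under these made-up numbers, the HD event stream needs roughly 13 times the bandwidth of the 60fps frame stream, and the gap grows with every pixel added. The event rate also depends on the scene, so a link sized for a quiet room can saturate the moment the camera starts moving.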

Before I did much research into event cameras, I would have wished anyone working with them the best of luck; they weren’t going to have much help otherwise. I have been happily proven wrong. There is a core group of academics pushing the state of the art, slowly but surely, and publishing their infrastructure, algorithms, and results for the world to use. Those who see the promise of event cameras in robotics and autonomy are willing to put in the time and help others get started.

💡 Many of these resources and their applications are listed in the technical appendix at the end of this article.

That being said, all I found only convinced me to raise my score for Engineering Ease from a 1/10 to a resounding 3/10. The passion is evident in these open-source libraries, but they still rely on a base of knowledge that few possess. As we explored in Part I, there are no fewer than 8 common ways of interpreting event camera data. Even if an engineer can produce all 8, how will they understand which formats are best in which situations? How can they engineer around the steep throughput requirements necessary to gather that data in a lossless fashion in the first place? These problems are approached in open-source solutions, but not solved.
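To make the "which format?" question concrete, here is a minimal sketch of one widely used interpretation: summing signed event polarities per pixel over a time window, often called an event frame. The `Event` tuple layout and the function name are hypothetical, and I am assuming (not confirming) that this lines up with one of the formats covered in Part I.

```python
from typing import Iterable, List, NamedTuple


class Event(NamedTuple):
    """Hypothetical event layout: pixel position, timestamp (microseconds), polarity."""
    x: int
    y: int
    t_us: int
    polarity: int  # 1 for a brightness increase, 0 for a decrease


def accumulate_event_frame(
    events: Iterable[Event], width: int, height: int, t_start_us: int, t_end_us: int
) -> List[List[int]]:
    """Sum signed polarities per pixel for events with t_start_us <= t < t_end_us."""
    frame = [[0] * width for _ in range(height)]
    for ev in events:
        if t_start_us <= ev.t_us < t_end_us:
            frame[ev.y][ev.x] += 1 if ev.polarity > 0 else -1
    return frame


if __name__ == "__main__":
    stream = [
        Event(0, 0, 10, 1),
        Event(0, 0, 20, 1),
        Event(1, 1, 30, 0),
        Event(1, 0, 5000, 1),  # falls outside the window below
    ]
    for row in accumulate_event_frame(stream, width=2, height=2, t_start_us=0, t_end_us=1000):
        print(row)
```

Even this simple format forces decisions that the hardware doesn't make for you: the window length trades latency against noise, and summing polarities throws away the microsecond timing that made the sensor attractive in the first place.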

Anecdotally, there is only one instance of an event camera I’ve ever seen used commercially: the Samsung SmartThings Vision. This DVS was a part of Samsung’s line of security and smart home devices, specifically marketed as bringing “security that respects your privacy” as it could only record outlines. It was only ever sold in Australia, Europe, and Korea, and never did make its way to the USA (luckily, the Tangram founders did manage to buy one from Australia way back when). From forum reports, it seemed extremely finicky; there were constant false alarms and incorrect classifications that made it a hassle to use in any busy part of the house. That being said, it was a bold first step in applied event camera tech.

Since then… well, not a lot has happened since then. A cursory search for applications in commercial devices doesn’t show much; most applied event camera tech is still being used for tech demonstrations. This is in no small part due to how difficult they are to obtain.

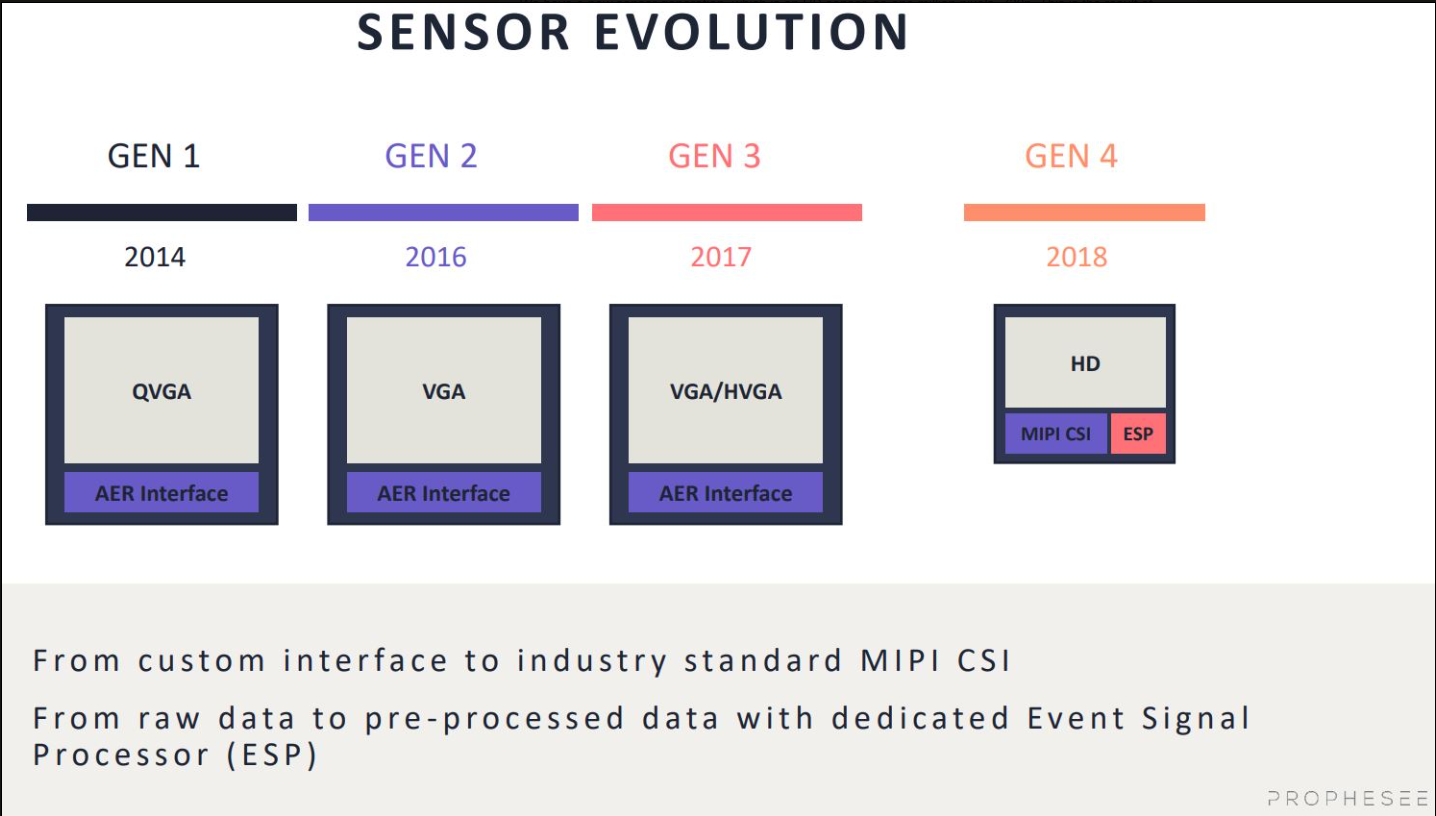

Prophesee does most of their development in collaboration with Sony, who have their branding on most of the chips now offered by Prophesee. The pair are slowly building higher and higher resolution cameras, with some of their latest being full HD resolution. As we’ve discussed in Part I, this kind of resolution can be more of a hindrance than an advantage, especially in dynamic environments. However, Prophesee’s Gen 4 sensors contain something they call an Event Signal Processor, or ESP. As quoted from the Prophesee docs, ESP claims to do a few things to keep Mev/sec at a reasonable level:

The partners also offer a sort of SDK for their event camera line that they refer to as Metavision Intelligence, which includes the “largest set of Event-Based Vision algorithms accessible to date”. I have personally never interacted with the software, but if marketing is any indication, it can serve your needs… whatever those needs might be.

For those interested in trying out the hardware: their EVK4 development kit is available for purchase, providing you’re willing to talk to a sales rep first.

As covered above, Samsung is the only company that I know of that has actually had a commercial use case for event cameras, so it follows that they would have an event camera line for sale. This… isn’t actually true, to my knowledge. The Samsung SmartThings Vision is no longer manufactured or supported, and there hasn’t been a standalone camera produced since.

That being said, Samsung continues to iterate and improve the technology. According to slides shared at Image Sensors World, the company was iterating on version 4 of their sensor as of January 2020. Can one be purchased? I really don’t know!

According to a published presentation by Dr. Davide Scaramuzza of ETH Zurich, the first commercial event camera was created in 2008 under the name “Dynamic Vision Sensor”, aka DVS, by iniLabs. Since then, iniLabs’ commercial endeavors have become IniVation, which currently sells several models of event camera on their website. The DAVIS346, which is used in research papers here and there, will cost a cool 4900 euros at the commercial rate with a 500 euro discount for those in academia.

IniVation stands out as one of the few companies whose event camera module feels truly commodified. Its form factor is clean, its communication protocols are standard, and it has a real price attached; I don’t need to talk to a representative to get one. For those trying to get their hands on any event camera at all, this seems the most approachable option (assuming you have 4900 euros ready to go).

CelePixel has been a name in event camera manufacturing for some time. Given Omnivision’s omnipresence in the camera field, it makes sense that Will Semiconductor (Omnivision’s parent company) would make an acquisition in the event camera space sooner or later.

CelePixel has a few different versions of event cameras, with some of them approaching HD resolution. A presentation from the partnership can be found online here where they discuss different resolutions and capabilities of their event camera lines. CelePixel is also one of the only companies that has made their camera repositories open source (though the repositories don't seem entirely developer-friendly). Find those resources in the Appendix at the end of the article.

Insightness does not give a lot of primary information about their company or product online. However, their Silicon Eye event camera can be found in a few academic labs around their native Zurich. A conference talk at CVPR about their Silicon Eye 2 can be found here.

💡 Are you an event camera manufacturer that we missed? Let us know!

For all of their current setbacks, I still hold a lot of hope for event cameras. They truly are capable of sensing things that no other sensor could, at speeds that are currently unimaginable for this kind of visual data. It just comes down to how that data is used that makes the difference.

Without guidelines or general rules of play, I believe most engineers would revert to just treating event camera data like any other camera, albeit a powerful one. That might deliver some quick algorithmic wins, but it really just scratches the surface of what event cameras are capable of. In the hands of an expert, they can do some crazy stuff. Check out this demonstration (from 2017!) from RPG @ UZH [5]:

Cool demos or no: until we’re all experts, or we have APIs that abstract away the hard decisions, event cameras won’t get their chance to shine alongside the Common Sensors of note in autonomy: cameras, LiDAR, and depth. Lucky for us, it seems it’s only a matter of time.

…and maybe some help from Tangram Vision.

This covers sources across both Parts I and II.
