Radically accelerate your roadmap with Tangram Vision's perception tools and infrastructure.

One benefit of building sensor software for autonomy, rather than an autonomous platform itself, is that we get to observe a broad cross-section of perception design approaches across an entire industry. For instance, we’ve observed that solid state LiDAR appears to be gaining ground against spinning, mechanical units. We’ve seen more companies adopting off-the-shelf HDR cameras from ADAS systems into their own perception arrays. And, most recently, we’re seeing quite a few teams testing — and subsequently adopting — thermal cameras. Of all the modalities that we consider to be “Sensoria Obscura”, it appears that thermal may be the next breakout contender.

We’ll assume that most readers understand the principles behind thermal cameras. But, for those who don’t, here’s the quick primer: in design and construction, a thermal camera is not too dissimilar from a typical CMOS camera. But, instead of each pixel recording intensity in the visible light spectrum, each pixel records intensity in the infrared spectrum. In doing so, a thermal camera can visualize an object based on the heat it emits, rather than the light it reflects.

So why are autonomy companies experimenting with (and increasingly adopting) thermal cameras as part of their sensor arrays? It turns out there are a number of strengths that naturally lend themselves towards the needs of autonomous vehicles and robots.

ADAS and autonomy rely on sensing arrays that can capture data on environments, objects, and events under different circumstances and conditions. For systems that operate in broader operational design domains (ODDs) such as highways and city streets, that array of circumstances and conditions can be vast. Therefore, as ADAS and autonomy have evolved, it has become important to use multiple modalities simultaneously to minimize scenarios where an autonomous agent is left completely unable to sense because of sensor failures. As such, a “holy trinity” of perception sensing modalities has emerged to provide sufficient data under most of the conditions encountered in urban and highway ODDs. This trinity is familiar to anyone in the autonomy space: cameras, LiDAR, and depth (what we refer to as the “Common Sensors” here at Tangram Vision). For autonomous vehicles that operate on roadways, we can add radar as an honorary Common Sensor as well.

Collectively, these modalities provide a mix of near- to long-range visual and 3D data, and they do so under many circumstances. But “many” is not “all”. This is where thermal cameras have generated interest in robotics and autonomous vehicles.

The Common Sensors identified above provide sufficient data for an autonomous agent to operate in a vast majority of conditions. However, if that agent is to deliver on its promise of efficient, continuous operation, it must be prepared to operate under edge case conditions that can flummox the Common Sensors.

Visible light fades as day turns to night, and with it so does the efficacy of most cameras (even HDR cameras, which are still reliant on ambient light). But since thermal cameras don’t record in the visible light spectrum, this is a scenario where they can shine (or…burn brightly?). Consider the following: in nighttime lighting conditions, a thermal camera can identify objects that are 4-6X further away than a standard RGB camera that is aided by car headlights. This is doubly important for AV and ADAS systems that use training datasets and AI to inform their behavior. If a system is able to more quickly ascertain that an upcoming object should be classified as a pedestrian, it can respond appropriately well before the distance between it and the object in question has closed too much.

Here in Northern California, fog is common. When fog is present, it can blind not just cameras and depth sensors, but LiDAR as well. That’s because even moderate fog scatters light in the visible spectrum and in the near-infrared wavelengths that most LiDAR units use. As thermal cameras typically operate in either the 3-5μm band (midwave infrared, or MWIR) or the 8-12μm band (longwave infrared, or LWIR), they are more robust to foggy conditions. With that said, we need to dispel a common misconception: thermal cameras aren’t 100% robust to fog. In fact, a thermal camera’s ability to sense in fog depends largely on the thickness of the fog and the wavelength band the camera uses. MWIR is less suited to cutting through fog to capture a signal, whereas LWIR is more capable.
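As a side note on why the LWIR band comes up so often for spotting people, here is a minimal sketch using Wien's displacement law, which gives the wavelength at which an ideal blackbody emits most strongly. The temperatures below are illustrative assumptions rather than measurements, and real objects are not ideal blackbodies, so treat the output as a rough guide only.

```python
# Wien's displacement constant in micrometre-kelvins.
WIEN_B_UM_K = 2897.77

# Band edges as quoted in the text above (micrometres).
BANDS_UM = {"MWIR": (3.0, 5.0), "LWIR": (8.0, 12.0)}


def peak_wavelength_um(temp_kelvin: float) -> float:
    """Wavelength at which an ideal blackbody at temp_kelvin emits most strongly."""
    if temp_kelvin <= 0:
        raise ValueError("temperature must be positive (kelvin)")
    return WIEN_B_UM_K / temp_kelvin


def band_for(wavelength_um: float) -> str:
    """Name of the band containing wavelength_um, or 'outside' if neither."""
    for name, (low, high) in BANDS_UM.items():
        if low <= wavelength_um <= high:
            return name
    return "outside"


if __name__ == "__main__":
    # Illustrative temperatures (assumptions for this sketch).
    examples = [
        ("pedestrian skin, ~305 K", 305.0),
        ("hot exhaust, ~700 K", 700.0),
    ]
    for label, temp_k in examples:
        peak = peak_wavelength_um(temp_k)
        print(f"{label}: peak near {peak:.2f} um ({band_for(peak)})")
```

Under these assumptions, objects near body temperature peak inside the LWIR band, while much hotter objects peak in MWIR.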

The “imaging” chip on a thermal camera includes an array of pixels just like an RGB camera. However, because infrared wavelengths are longer than visible ones, the pixels are larger, and thermal cameras therefore have a resolution limit far below that of RGB cameras.

Because the underlying design is so similar, integrating a thermal camera into your platform’s sensor array isn’t too different from adding any other kind of camera. But there are differences, most notably in how thermal cameras capture data and how they are calibrated.

Throughout this article, we’ve noted that thermal cameras operate in the infrared spectrum, whereas a more typical camera such as an RGB camera works in the visible light spectrum. This difference has implications on both the performance of these different cameras, as well as their technical design.

Because infrared waves are longer than waves in the visible light spectrum, the pixels that record them are larger on a thermal camera than on an RGB camera. As a result, thermal cameras typically record at a lower resolution than RGB cameras, as fewer pixels fit into the same chip size. Similarly, the longer IR wavelength can require a longer exposure, which can then limit camera performance in two ways. One is the presence of artifacts in fast-moving scenes. The other is an inability to operate at higher frame rates. While it is possible to buy thermal cameras that operate at 30 or even 60 fps, many manufacturers recommend lower frame rate settings to compensate for these limitations.

As a result, registering thermal camera output with other sensor output requires taking into consideration these unique characteristics and their impacts on data.
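To make the pixel size and resolution trade-off concrete, here is a small arithmetic sketch. The sensor width and both pixel pitches are hypothetical round numbers chosen for illustration; they are not taken from any datasheet mentioned in this article.

```python
def pixels_across(sensor_width_um: int, pixel_pitch_um: int) -> int:
    """Number of whole pixels that fit across a sensor of the given width."""
    if sensor_width_um <= 0 or pixel_pitch_um <= 0:
        raise ValueError("dimensions must be positive")
    return sensor_width_um // pixel_pitch_um


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    sensor_width_um = 7680   # same chip width assumed for both cameras
    thermal_pitch_um = 12    # assumed thermal pixel pitch
    visible_pitch_um = 3     # assumed visible-light pixel pitch

    thermal_px = pixels_across(sensor_width_um, thermal_pitch_um)
    visible_px = pixels_across(sensor_width_um, visible_pitch_um)
    print(f"thermal: {thermal_px} px across, visible: {visible_px} px across")
```

With these assumed numbers, a pixel four times wider yields one quarter of the horizontal resolution on the same chip, and the gap is squared when counting total pixels.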

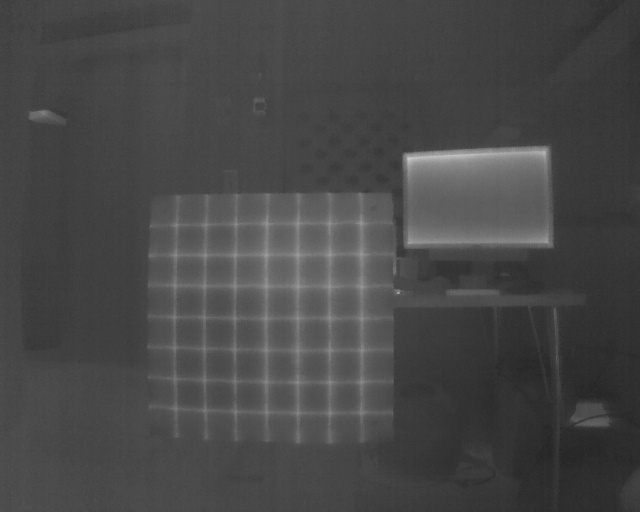

Because we’re discussing adding thermal cameras to sensing arrays, it makes sense to explore the extrinsic calibration of these cameras with the other sensors that might also be used. As you may have already guessed, a standard camera calibration fiducial will not work out of the box with a thermal camera. But with a few tricks, it can.

Fiducial targets typically feature a black pattern on a white substrate. These targets can be heated with a heat lamp, and the black printed portions will absorb more heat than the white portions. When sufficiently and evenly heated in this manner, these targets can then be used simultaneously for both thermal cameras and other cameras. Note, however, that the manner in which a fiducial is heated could create localized hot spots that can impede a calibration process. Therefore, it’s imperative that the heating source used be able to heat the entire target evenly.
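One way to sanity-check heating before collecting calibration data is to compare average readings across regions of the target. The sketch below is a hypothetical helper, not part of any calibration tool: it assumes the thermal frame has already been cropped to the target and is given as a 2D list of readings, and that the fiducial pattern is spread roughly evenly across the four quadrants. The `max_spread` threshold is an assumption you would need to tune.

```python
from statistics import mean


def quadrant_means(frame):
    """Mean reading in each quadrant of a 2D list of thermal values."""
    rows, cols = len(frame), len(frame[0])
    if rows < 2 or cols < 2:
        raise ValueError("frame must be at least 2x2")
    mid_r, mid_c = rows // 2, cols // 2
    spans = {
        "top_left": (range(0, mid_r), range(0, mid_c)),
        "top_right": (range(0, mid_r), range(mid_c, cols)),
        "bottom_left": (range(mid_r, rows), range(0, mid_c)),
        "bottom_right": (range(mid_r, rows), range(mid_c, cols)),
    }
    return {
        name: mean(frame[r][c] for r in r_span for c in c_span)
        for name, (r_span, c_span) in spans.items()
    }


def is_evenly_heated(frame, max_spread):
    """True if the quadrant means differ by no more than max_spread."""
    means = quadrant_means(frame)
    return max(means.values()) - min(means.values()) <= max_spread


if __name__ == "__main__":
    # Toy 4x4 frame with a hot spot in the top-left corner (made-up values).
    frame = [
        [40.0, 40.0, 30.0, 30.0],
        [40.0, 40.0, 30.0, 30.0],
        [30.0, 30.0, 30.0, 30.0],
        [30.0, 30.0, 30.0, 30.0],
    ]
    print(quadrant_means(frame))
    print("evenly heated:", is_evenly_heated(frame, max_spread=1.0))
```

A check like this only catches coarse hot spots; a finer grid or a per-square comparison would be needed to detect smaller gradients.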

Once data streams and calibration have been determined, fusing a thermal camera stream with other sensor streams is no different from integrating any other modality. Sources need extrinsic calibration and time synchronization to ensure that data delivered from all sensors agree in time and space. As noted above in “Differences In How Thermal Cameras Capture Data”, many thermal cameras output data at a low fps. Therefore, time synchronization may need to take into account that other sensors will be transmitting data more frequently than a thermal camera.
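As a rough illustration of the rate mismatch, the sketch below pairs each thermal frame with the closest-in-time frame from a faster sensor and drops pairs that are too far apart. The function name, the millisecond integer timestamps, and the tolerance are all assumptions made for this example; a real pipeline might instead rely on hardware triggering or interpolation.

```python
from bisect import bisect_left


def match_nearest(thermal_ts, other_ts, max_offset):
    """For each thermal timestamp, return the index of the closest timestamp
    in other_ts (which must be sorted), or None if none is within max_offset."""
    matches = []
    for t in thermal_ts:
        i = bisect_left(other_ts, t)
        # Only the neighbours on either side of the insertion point can be closest.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(other_ts)]
        if not candidates:
            matches.append(None)
            continue
        best = min(candidates, key=lambda j: abs(other_ts[j] - t))
        matches.append(best if abs(other_ts[best] - t) <= max_offset else None)
    return matches


if __name__ == "__main__":
    # Made-up timestamps in milliseconds: ~9 Hz thermal, ~30 Hz other sensor.
    thermal_ts = [0, 111, 222]
    other_ts = [0, 33, 67, 100, 133, 167, 200, 233]
    print(match_nearest(thermal_ts, other_ts, max_offset=17))
```

Here the tolerance is set to roughly half the faster sensor's frame period, so every thermal frame finds a partner as long as neither stream drops frames.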

If you’re now convinced that a thermal camera is a worthy addition to your autonomous platform’s sensor array, then you have a limited (but growing) selection to choose from. As of now, there are two established manufacturers and three upstarts looking to bring their cameras to robotics and autonomous vehicles.

Teledyne/FLIR has been making thermal cameras for nearly half a century. As such, they offer one of the widest arrays of thermal cameras of any sensor manufacturer. FLIR offers their IP67-rated ADK™ specifically for autonomy companies to rapidly test and assess thermal imaging’s suitability as part of an autonomy or ADAS sensing array. The ADK’s resolution is 640 x 512.

The ADK has a configurable frame rate, outputting data at either 30 Hz or 60 Hz (9 Hz is possible as well). The ADK itself is available with four data transfer types: FPD-LINK III, USB-C, GMSL1, or GMSL2. Likewise, the ADK is available with four different horizontal FOVs: 24°, 32°, 50°, or 75°. FLIR’s convention is to match telephoto-like lenses to narrower FOVs, which means that longer range applications will by default require a narrow FOV selection.
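To see why range pushes you toward a narrow FOV, here is a back-of-the-envelope sketch using an ideal pinhole camera model. It uses the 640-pixel image width and the four HFOV options quoted above; the target width and distance are assumptions for illustration, and lens distortion is ignored.

```python
import math


def focal_length_px(image_width_px: float, hfov_deg: float) -> float:
    """Focal length in pixels for an ideal pinhole camera with the given HFOV."""
    return (image_width_px / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)


def pixels_on_target(target_width_m: float, distance_m: float,
                     image_width_px: float, hfov_deg: float) -> float:
    """Approximate horizontal pixels covered by a target facing the camera."""
    return focal_length_px(image_width_px, hfov_deg) * target_width_m / distance_m


if __name__ == "__main__":
    # Assumed scenario: a 0.5 m wide pedestrian at 100 m.
    for hfov in (24.0, 32.0, 50.0, 75.0):
        px = pixels_on_target(0.5, 100.0, 640, hfov)
        print(f"HFOV {hfov:>4.0f} deg: {px:.1f} px on target")
```

At a fixed sensor resolution, each step toward a wider FOV spreads the same pixels over more of the scene, so a distant object covers fewer of them.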

The public datasheet for the FLIR ADK can be found in our sensor datasheet library.

Like FLIR, SEEK Thermal is, uh, seeking to get into the autonomy space with a development kit for their MosaicCORE sensor that is available in a number of configurations.

The starter kit is available in two different resolutions with a variety of HFOV options: 200 x 150 (15°, 21°, 35° or 61°) or 320 x 240 (24°, 34°, 56°, or 105°, the latter with vignetting). In all cases, the starter kit uses micro USB for communication.

Starter kits are available for under $400, making SEEK’s kits among the cheaper options for testing and experimenting with this modality. The public datasheet for the SEEK Thermal MosaicCORE Starter Kits can be found in our sensor datasheet library.

Owl has focused their sensor design specifically on the autonomous vehicle and ADAS market. What makes their sensor unique is the application of deep learning and proprietary optics that allow it to deliver a high definition depth map. As a result, Owl claims that they can outperform LiDAR and radar on those modalities’ traditional strengths, while also adding thermal imaging’s greater resilience to low-light and occluded environmental conditions.

Another newcomer, Adasky, has also designed its thermal camera modules specifically for autonomous vehicle and ADAS applications. Adasky builds their entire system in-house, including both the software and hardware. Like FLIR and SEEK, Adasky offers thermal cameras in a wide range of horizontal FOV configurations so that they can be tuned for different use cases.

Foresight’s thermal solution uses an array of two thermal cameras to create a wide baseline stereo setup that allows it to capture 3D thermal data at long ranges. Part of what makes Foresight’s system unique is the ability of the two cameras to automatically set and maintain extrinsic calibration with each other, which keeps performance optimal.

FLIR offers a large dataset for training ML systems on thermal data. It contains 26,000+ annotated frames featuring people, cars, traffic signs, and other classified objects that are typically encountered by autonomous vehicles and ADAS systems. The free dataset can be downloaded here (note: you must submit personal information to access the dataset).

University College London has produced two large datasets (one with 14,000+ frames, the other with 26,000+ frames) using thermal imaging to classify different materials.

Tangram Vision’s own datasheet library includes material for SEEK Thermal and FLIR systems, with no need to fill out a form or provide personal information.

So is thermal the hot new modality for autonomous platforms and ADAS? Judging by the amount of development activity, as well as the interests of the companies we speak to, our prediction is that we’ll see it as part of sensing arrays with increasing frequency. To that end, thermal cameras are already supported in the Tangram Vision Platform, as we have partners who actively use this modality now. If you’re curious about adding a thermal camera to your own array, and have questions around integration, calibration, and fusion, we’re always happy to chat! And if you enjoyed this post, please subscribe to our newsletter, follow us on Twitter, and follow us on LinkedIn to get notified when we launch our next post.

Tangram Vision helps perception teams develop and scale autonomy faster.